Does AI Take Away Your Job?

Hello Data Science and ML Folks!

I’m sorry to say it, but AI is likely coming for your job.

Before you write me off as some clickbait AI doomer, I want to explain why 95% of the tech community (yes, even Data Science & ML folks!) aren’t thinking clearly about how AI will hurt our future earnings potential.

First up, we tend to read stories like the one below, but then think our own job is safe because we’re special.

You can accuse me of fear-mongering, but I’m genuinely trying to warn you about what’s coming down the pipeline. You might be making suboptimal career decisions today because you’re underestimating the risk AI poses, and I think that happens for 2 big reasons:

• Sapien Centrism

• Linear Brains

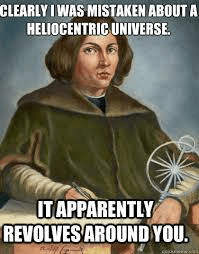

The Universe Revolves Around You

We Homo sapiens have a long track record of sapien centrism, where we elevate our own uniqueness and our role in the universe. It was only 450 years ago that the Western world thought Earth was the center of the universe, and that the Sun and stars revolved around us.

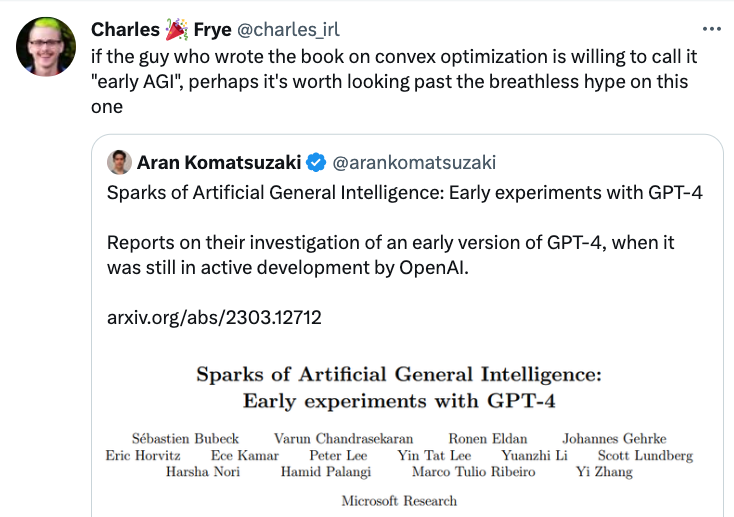

In the 1960s, a common view was that computers could never beat a top chess player, because our brains were just so special. But recent improvements in AI have shown us that playing games like chess and Go, doing creative tasks like art and poetry, and solving problems in math and coding aren’t skills reserved for humans. Just look at some of GPT-4’s recent test scores to see what I mean:

Yet most people remain in denial, clinging to the hope that humanity has some magic pixie dust that makes us uniquely intelligent and creative. Most people aren’t willing to acknowledge just how creative GPT-4 already is:

Faced with the insane accomplishments of LLMs, there are still plenty of techies who trivialize them, saying GPT is just a “stochastic parrot” that uses statistics to repeat and copy things from the internet. These same techies forget that much of what we humans do, software engineering included, already relies on copy-pasting code from Stack Overflow:

Then there’s another group of people who see shortcomings in today’s GPT and other LLM models, and gleefully conclude:

“SEE! We humans are special because computers can’t do X!”

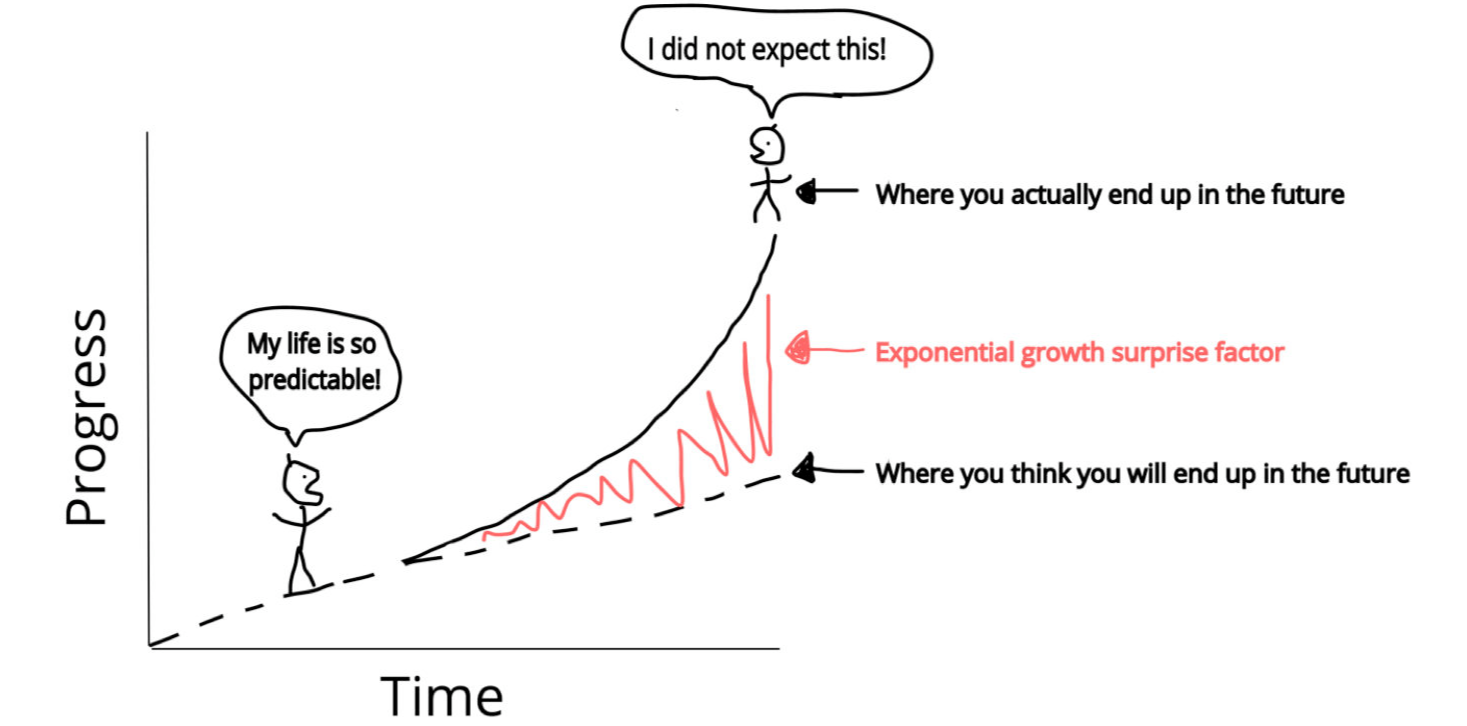

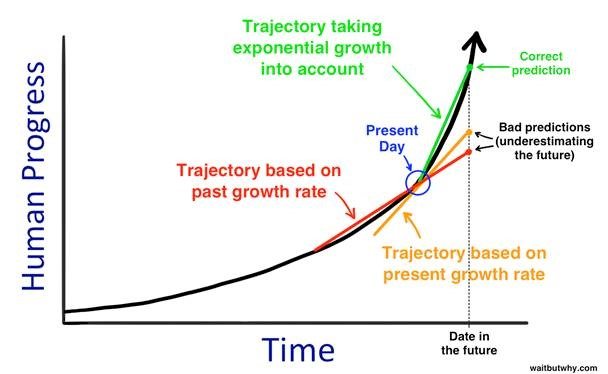

I think this nit-picky group suffers from a phenomenon I call linear brains.

Linear Brains Livin’ In An Exponential World

Homo sapiens evolved during a 550,000-year period when things were mostly stagnant, relative to today’s fast-changing world. I mean, look at just how long it took us to invent farming, stone tools, and writing:

Our brains evolved during a comparatively “linear” stretch of time, when technological progress was infrequent and slow. As a result, we’re terrible at reasoning about exponential processes.

But we need to realize that the internet is only 40 years old (it dates to 1983), and we’re still in the very early innings of tech and computing. Look at the progress AI has made in just the past few years, and you’ll see it’s improving at an exponential rate. Yet even when we acknowledge that exponential rate of progress, our linear brains STILL lead us to underpredict the effects of AI.
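The gap between linear and exponential thinking is easy to see with a toy calculation. The sketch below compares a forecast that adds a fixed amount each year with one that doubles each year. The starting value, the yearly step, and the doubling rate are hypothetical numbers picked purely for illustration; they are not measurements of actual AI progress.

```python
# Toy comparison of linear vs. exponential extrapolation.
# All inputs are hypothetical and chosen only to illustrate the idea.


def linear_forecast(start: float, step: float, years: int) -> list[float]:
    """Value at each year if progress adds a fixed amount per year."""
    return [start + step * t for t in range(years + 1)]


def exponential_forecast(start: float, growth: float, years: int) -> list[float]:
    """Value at each year if progress multiplies by a fixed factor per year."""
    return [start * growth ** t for t in range(years + 1)]


if __name__ == "__main__":
    years = 10
    # Both curves start at 1.0 and both read 2.0 after year one, so someone
    # looking only at the first year can't tell them apart.
    linear = linear_forecast(start=1.0, step=1.0, years=years)
    exponential = exponential_forecast(start=1.0, growth=2.0, years=years)

    for t in range(years + 1):
        print(f"year {t:2d}: linear={linear[t]:6.1f}  exponential={exponential[t]:7.1f}")
```

With these made-up inputs, both curves agree after one year, but by year ten the linear guess is 11.0 while the doubling curve is 1024.0. That widening gap is the kind of underprediction a linear brain makes.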

That’s why, when people nit-pick LLM performance and say:

“computers can’t do X”

I like to say:

“computers can’t do X YET”.

I’m not arguing that today’s GPT models are perfect, or that you’ll be fired tomorrow because of ChatGPT. My concern is that, given how powerful these GPT models already are and how quickly they’re improving, using our linear brains to reason about the future effects of AI causes us to grossly underestimate this technology.

So Nick, what do we do?

Because of my book Ace the Data Science Interview, my data science job-hunting video course, and my frequent career-advice posts on LinkedIn, people naturally come to me with questions like:

“How do I adapt my career in response to the threat of AI-related job loss??”

I’m sorry, but I don’t have any solid answers yet. I wish I could list off 37 reasons why the AI hype is overblown and your 10-year career plan is safe, but right now, in good faith, I can’t.

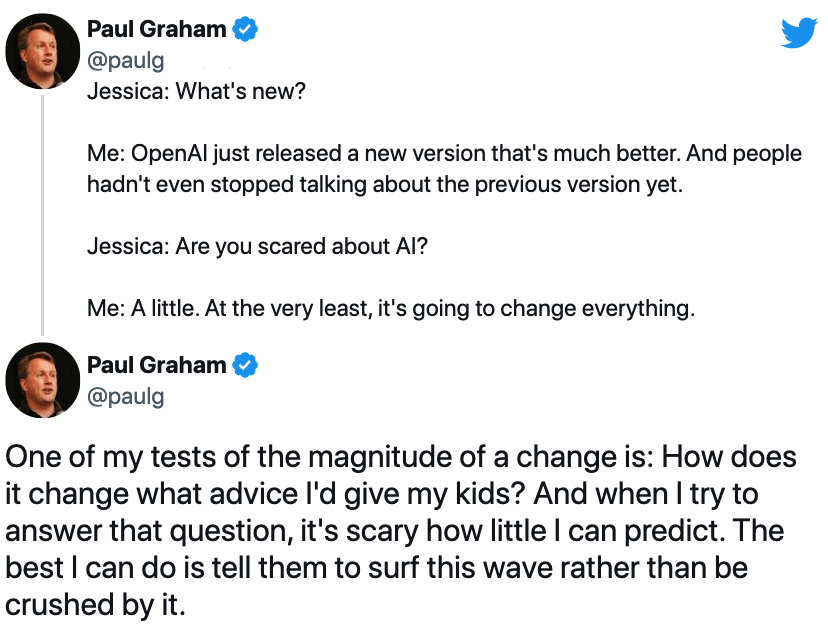

Venture capitalist Paul Graham (co-founder of Y Combinator) is similarly unsure:

John Carmack, legendary programmer and former CTO of Oculus VR, was asked a similar question by a Computer Science student, and had this to say:

In a few weeks, I hope to round up some more actionable ideas for AI-proofing our careers. In the meantime, I have a question for you:

What actions do you plan to take to prevent AI from taking your job?